6.5: A Repository of Logical Fallacies

Below is a list of informal fallacies, divided into four main categories: fallacies of irrelevance, presumption, ambiguity, and inconsistency. While this list is by no means exhaustive, it will include some of the most common fallacies used by writers and speakers, both in the world and in the classroom.

Fallacies of Irrelevance

One of the most common ways to go off track in an argument is to bring up irrelevant information or ideas. Fallacies of this kind are grouped here into two main categories: the red herring fallacies and the irrelevant appeals.

- Red Herring Fallacies —These aim to distract the reader by introducing irrelevant ideas or information. They divert attention away from the validity, soundness, and support of an argument. Think of red herrings as squirrels to a dog—almost impossible to resist chasing once spotted.

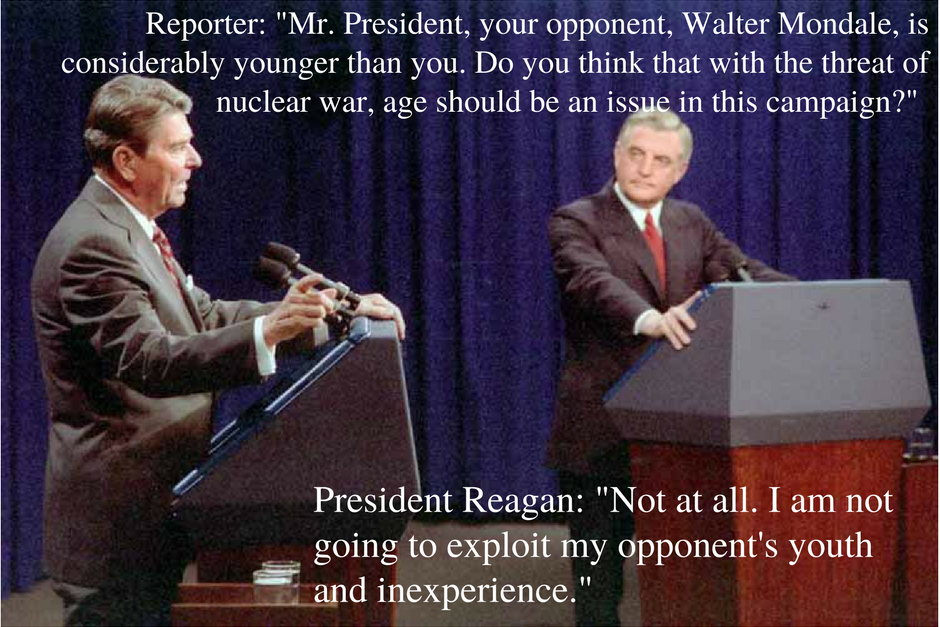

Figure \(\PageIndex{1}\) Reagan's Red Herring

- Weak/False Analogy —An analogy is a brief comparison, usually to make writing more interesting and to connect with the reader. While writers often use analogies effectively to illustrate ideas, a bad analogy can be misleading and even inflammatory.

Example: “Taxes are like theft.” This statement makes a false analogy because taxes are legal and thus cannot logically be defined as, or even compared to, something illegal.

- Tu Quoque —Also known as an appeal to hypocrisy, this fallacy translates from the Latin as “you, too.” Known on grade school playgrounds around the world, this false argument distracts by turning around any critique on the one making the critique with the implication that the accuser should not have made the accusation in the first place because it reveals him as a hypocrite—even if the accusation or critique has validity.

Example 1: “Mom, Joey pushed me!” “Yeah, but Sally pushed me first!” Any sister who has ratted out a brother before knows she will have to deal with an immediate counter attack, claiming that she has perpetrated the same crime she has accused the brother of doing (and more than likely, she has done so). The brother hopes that the sister’s blatant hypocrisy will absolve him of his crime. Any veteran parent of siblings will know not to fall for this trick.

Example 2: Joe the Politician has been legitimately caught in a lie. Joe and his supporters try to deflect the damage by pointing out the times his opponents have been caught lying, too; this counter accusation implies that Joe’s lie should be excused because of the hypocrisy of those who found it and who dare to even talk about it. However, this counter accusation does not actually do anything logically to disprove or challenge the fact of Joe’s lie.

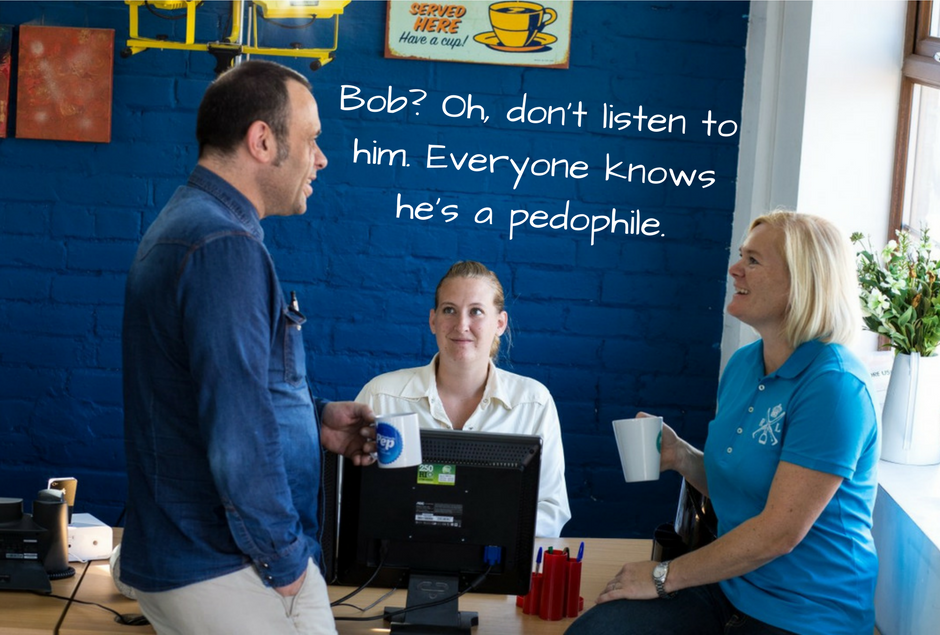

- Ad Hominem Attacks —The argumentum ad hominem is one of the most recognizable and irresistible of the red herring fallacies. Ad hominem attacks distract from an argument by focusing on the one making the argument, trying to damage his or her credibility. There are two main types of ad hominem attack: abuse and circumstance.

Ad hominem attacks of abuse are personal (often ruthlessly so), meant to insult and demean. Attacks of abuse distract the audience as well as the speaker or writer because he will believe it necessary to defend himself from the abuse rather than strengthen his argument.

Examples: These can include attacks on the body, intelligence, voice, dress, family, and personal choices and tastes.

In ad hominem attacks of circumstance, the debater implies that his opponent only makes an argument because of a personal connection to it instead of the quality and support of the argument itself, which should be considered independent of any personal connection.

Example: “You only support the Latino for this job because you’re a Latino.”

This statement fails the logic test because it only takes a personal characteristic into account–race–when making this claim. This claim does not consider two important issues: (1) People do not base every decision they make on their race, and (2) there may have been other perfectly logical reasons to support the Latino job applicant that had nothing to do with race.

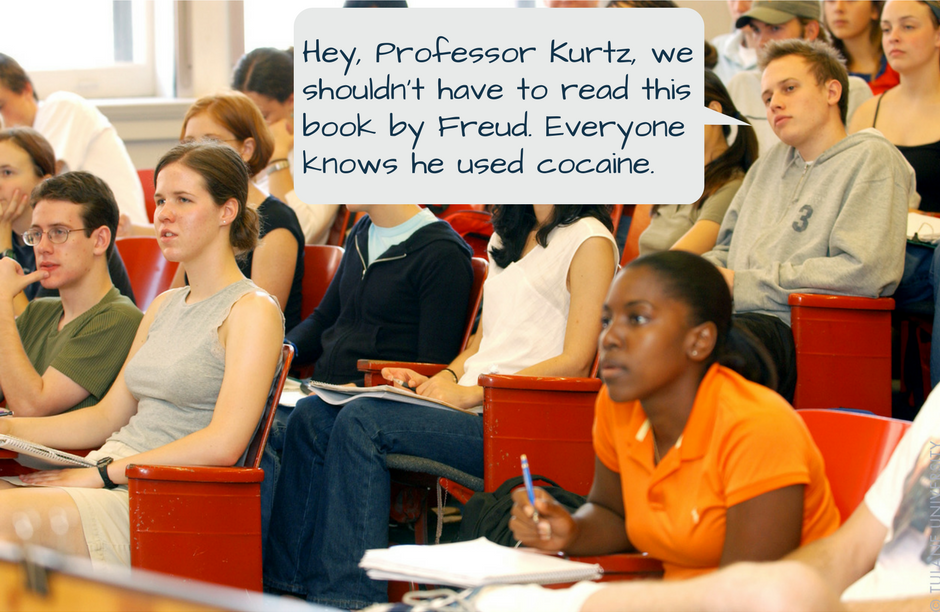

- Poisoning the Well —This is a type of ad hominem attack that attempts to damage the character of an opponent before that person even introduces an argument. Thus, by the time the argument is made, it often sounds weak and defensive, and the person making the argument may already be suspect in the minds of the audience.

Example: If a speaker calls out a woman for being overly emotional or hysterical, any heightened feeling—even a raised voice—may be attributed to her inability to control emotion. Furthermore, if that woman makes an argument, she can be ignored and her argument weakened because of the perception that it is rooted in emotion, not reason.

- Guilt by Association —This red herring fallacy works by associating the author of an argument with a group or belief so abhorrent and inflammatory in the minds of the audience that everyone, author and audience alike, is chasing squirrels up trees—that is, they are occupied by the tainted association to the reviled group—instead of dealing with the merits of the original argument.

Example: the argumentum ad Nazium, or playing the Hitler card. To counter an argument, either the arguer or a part of the argument itself is associated with Hitler or the Nazis. (“Vegetarianism is a healthy option for dieters.” “Never! You know, Hitler was a vegetarian!”) Because almost no one wants to be associated with fascists (or other similarly hated groups, like cannibals or terrorists), the author now faces the task of defending himself against the negative association instead of pursuing the argument. If, however, there are actual Nazis–or the equivalent of Nazis, such as white supremacists or other neofascists–making an argument based on fascist ideology, it is perfectly reasonable to criticize, oppose, and object to their extreme and hateful views.

Irrelevant Appeals —Unlike the rhetorical appeals, the irrelevant appeals are attempts to persuade the reader with ideas and information that are irrelevant to the issues or arguments at hand, or the appeals rest on faulty assumptions in the first place. The irrelevant appeals can look and feel like logical support, but they are either a mirage or a manipulation.

- Appeal to Emotion —manipulates the audience by playing too much on emotion instead of rational support. Using scare tactics is one type of appeal to emotion. Using pity to pressure someone into agreement is another example.

Example: Imagine a prosecuting attorney in a murder case performing closing arguments, trying to convict the defendant by playing on the emotions of the jury: “Look at that bloody knife! Look at that poor, battered victim and the cruelty of all those terrible stab wounds!” The jury may well be swayed by such a blatant appeal to emotion–pity, horror, disgust—but this appeal doesn’t actually provide any concrete proof for the defendant’s guilt. If the lawyer has built a logical case that rests on an abundance of factual data, then this appeal to emotion may be justified as a way to personalize that data for the jury. If, however, the lawyer only uses this appeal to emotion, the argument for guilt is flawed because the lawyer has tried to make up for a weak case by turning the jury members’ emotions into the main evidence for guilt.

- Appeal to Popularity —Also known as the bandwagon fallacy, the appeal to popularity treats the fact that many people believe or support something as evidence for its validity. However, once we stop to think this idea through, we can easily remember popular ideas that were not at all good or justifiable: The majority does not always make the best choice.

Example: A good example here would be fashion trends. What is popular from one day to the next does not necessarily have anything to do with whether something logically is a good idea or has practical use.

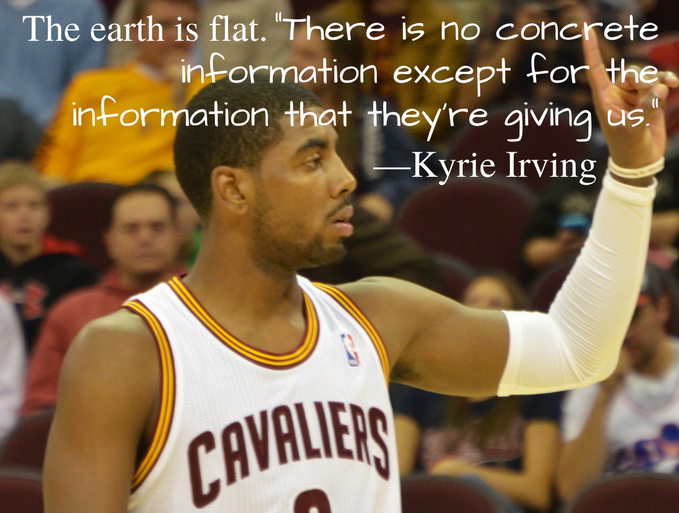

- Appeal to Incredulity —suggests that a lack of understanding is a valid excuse for rejecting an idea. Just because someone does not personally understand how something works does not mean that thing is false. A person does not need to understand how a car’s engine works to know that it does work, for instance. Often, in addition to rejecting the difficult idea, the arguer goes on to suggest that anyone who believes in the idea is foolish to do so.

Example: “It’s just common sense that the earth is flat because when I look at it, I can’t see any curve, not even when I’m in an airplane. I don’t need any scientist to tell me what I can clearly figure out with my own eyes.” This person has casually dismissed any scientific evidence against a flat earth as if it did not matter. Often those making an illogical appeal to incredulity will substitute what they think of as “common sense” for actual scientific evidence with the implication that they do not need any other basis for understanding. The problem is that many of the truths of our universe cannot be understood by common sense alone. Science provides the answers, often through complex mathematical and theoretical frameworks, but ignorance of the science is not a justifiable reason for dismissing it.

- Appeal to Nature —the assumption that what is natural is (1) inherently good and therefore (2) constitutes sufficient reason for its use or support. This is flawed because (1) how we determine what is natural can and does change, and (2) not everything that is natural is beneficial.

Example: “Vaccines are unnatural; thus, being vaccinated is more harmful than not being vaccinated.” A person making this statement has made an illogical appeal to nature. The fact that vaccines are a product of human engineering does not automatically mean they are harmful. If this person applied that logic to other cases, she would then have to reject, for instance, all medicines created in the lab rather than plucked from the earth.

- Appeal to Tradition/Antiquity —assumes that what is old or what “has always been done” is automatically good and beneficial. The objection, “But, we’ve always done it this way!” is fairly common, used when someone tries to justify or legitimize whatever “it” is by calling on tradition. The problems are these: (1) Most rudimentary history investigations usually prove that, in fact, “it” has not always been done that way; (2) tradition is not by itself a justification for the goodness or benefit of anything. Foot binding was a tradition at one point, but a logical argument for its benefit would strain credulity.

Example: “We should bar women from our club because that is how it has always been done.” The person making this argument needs to provide logical reasons women should not be included, not just rely on tradition.

- Appeal to Novelty —the mirror of the appeal to antiquity, suggesting that what is new is necessarily better.

Example: “Buy our new and improved product, and your life will forever be changed for the better!” Advertisers love employing the appeal to novelty to sell the public on the idea that because their product is new, it is better. Newness is no guarantee that something is good or of high quality.

- Appeal to Authority —Appealing to the ideas of someone who is a credentialed expert—or authority—on a subject can be a completely reasonable type of evidence. When one writes a research paper about chemistry, it is reasonable to use the works of credentialed chemists. However, the appeal to authority becomes a fallacy when misapplied. That same credentialed chemist would not be a logical authority to consult for information about medieval knights because authority in one area does not necessarily transfer to other areas. Another mistaken appeal to authority is to assume that because someone is powerful in some way that that power accords that person special knowledge or wisdom.

Example: Many societies throughout history have had hierarchical social and political structures, and those who happened to be in the top tier, like aristocrats and rulers, had authority over those below them. In fact, the term “nobility” in the west had embedded within it the notion that the aristocracy really were better—more ethical, more intelligent, more deserving of reward—than those lower on the social ladder. Careful study of the nobility shows, however, that some members were just as capable of immorality and stupidity as lower social groups.

- Appeal to Consequences/Force —the attempt to manipulate someone into agreement by either implicit or explicit threats of consequences or force (violence!).

Example: “Agree with me or you’ll be fired!” Holding something over another person’s head is not a reasonable way to support an argument. The arguer avoids giving any sort of logic or evidence in favor of a threat.

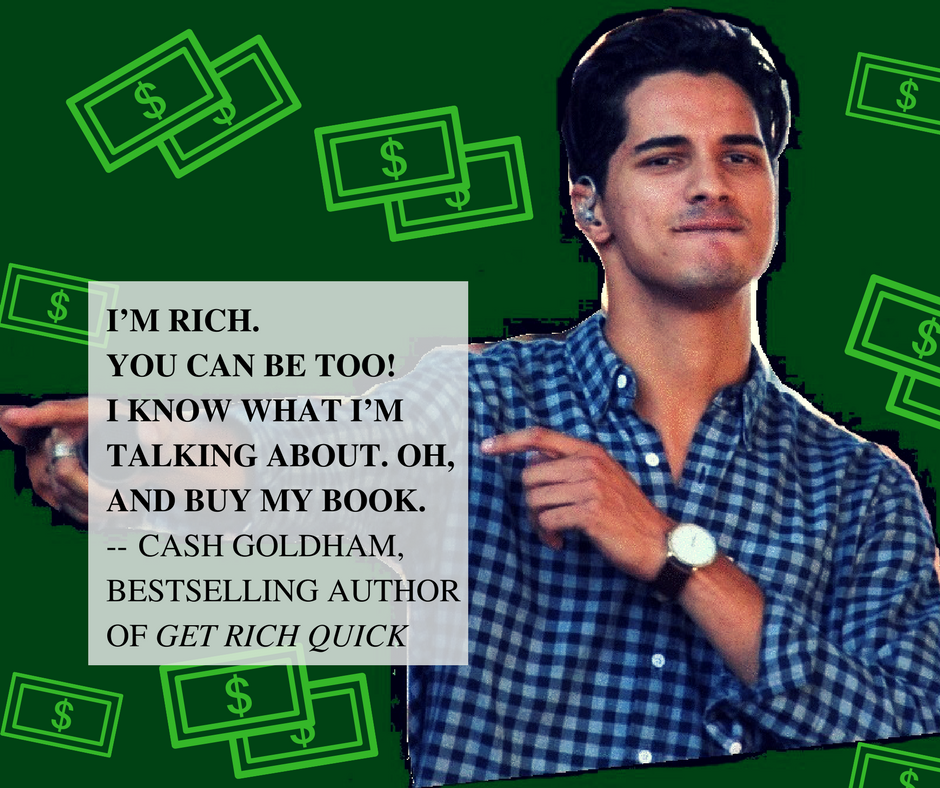

- Appeal to Wealth —the assumption that wealthy people have special knowledge or wisdom that derives from their economic position and that can then be applied to any area of knowledge.

Example: “Hi, I’m a famous actress, and while I’m not a qualified psychologist, read my new self-help book for how you, too, can avoid depression!” Fame and fortune alone do not turn someone into a qualified expert. Appealing to a person’s expertise solely based on wealth and position is thus logically flawed.

- Appeal to Poverty —the mirror of the above irrelevant appeal, that poor people have special knowledge or wisdom because of their adverse economic circumstances. This can work in another way: that poor people are particularly deficient in knowledge or wisdom because they are poor. However, neither assumption constitutes sound reasoning. The conditions of poverty are far too complex.

Example: “That man lives on unemployment benefits, so why would I care about his opinion on anything?” The arguer in this scenario unreasonably uses another’s poverty against him, by implying that a poor person would have worthless ideas. This implication has embedded within it the idea that someone is only poor because of some sort of personal lack–intelligence, morality, good sense, and so on. However, it is quite reasonable for a poor person to be intelligent, ethical, and wise. To assume otherwise is to risk making logical mistakes.

Fallacies of Presumption

To call someone presumptuous is to accuse that person of overreaching—making bold assertions without adequate reason or failing to follow the rules of behavior (but presuming it is okay to do so). The logical fallacy version of this involves making a case with inadequate or tainted evidence, or even no evidence whatsoever, or by having unjustified reasons for making the case in the first place.

Working with Flawed Evidence —These fallacies occur when an author uses evidence that has been compromised.

- Hasty Generalization —A hasty generalization derives its conclusion from too little information, evidence, or reason.

One type of hasty generalization is jumping to a conclusion from a small amount of evidence.

Example: Having one bad meal at a restaurant and then immediately concluding that all meals from that restaurant will be just as bad.

Another type of hasty generalization involves relying on anecdotal evidence for support. As human beings, we overestimate the power of personal experience and connections, so they can drown out scientific data that contradict an individual—or anecdotal—experience. Additionally, anecdotal evidence is persuasive because of the human desire for perfection. Perfection is a lofty—and mostly unreachable—goal, and when a product or a person or a program fails to live up to perfection, it becomes easier to dismiss—particularly when a personal story or two of imperfection is involved. Accurate information, however, comes from a much larger amount of data—analysis of hundreds or thousands or even millions of examples. Unfortunately, data can feel impersonal and, therefore, less convincing.

Example: “I love my new Banana™ laptop. The product ratings for it are very high.” “Oh, no one should ever buy one of their computers! My brother had one, and it was full of glitches.” Basing a judgement or an argument on a personal story or two, as in this case, is not logical but can be incredibly persuasive. However, if 98% of Banana™ computers run perfectly well, and only 2% have glitches, it is illogical to use that 2% to write this product off as universally terrible.
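The arithmetic behind the laptop example can be sketched in a few lines of Python. This is only an illustration under stated assumptions: the 2% glitch rate comes from the example, while the number of units sold, the number of owners a person knows, and the function names are hypothetical. The sketch also assumes that each unit fails independently of the others.

```python
# Only the 2% glitch rate comes from the example above; the units sold and
# the number of owners known are hypothetical figures chosen for illustration.
GLITCH_RATE = 0.02


def expected_glitchy(units_sold: int, rate: float = GLITCH_RATE) -> float:
    """Expected number of glitchy units among all units sold."""
    return units_sold * rate


def chance_of_hearing_a_bad_story(owners_known: int, rate: float = GLITCH_RATE) -> float:
    """Probability that at least one of the owners a person knows had a glitchy unit.

    Assumes each unit fails independently of the others.
    """
    return 1 - (1 - rate) ** owners_known


if __name__ == "__main__":
    print(expected_glitchy(1_000_000))
    print(round(chance_of_hearing_a_bad_story(20), 3))
```

Under these assumptions, a person who knows 20 owners has roughly a one-in-three chance of hearing at least one bad story, even though any single laptop still has a 98% chance of running well. Vivid anecdotes are therefore easy to find for a product that is reliable overall, which is why a story or two cannot stand in for the larger data set.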

In scholarship, hasty generalizations can happen when conclusions derive from an unrepresentative sample. A sample that fails to reflect the full complexity of the group it is meant to represent is unrepresentative, and any results drawn from its data will be flawed.

Example: If advances in cancer research were only, or mostly, tested on men, that would be unrepresentative of humanity because half of the human population—women—would not be represented. What if the cancer treatments affect women differently?

Another type of hasty generalization derived from poor research is the biased sample. This comes from a group that has a predisposed bias to the concepts being studied.

Example: If a psychologist were to study how high school students handled challenges to their religious views, it would be flawed to only study students at schools with a religious affiliation since most of those students may be predisposed toward a single type of religious view.

- Sweeping Generalization —the inverse of the hasty generalization. Instead of making a conclusion from little evidence, the sweeping generalization applies a general rule to a specific situation without providing proper evidence, without demonstrating that the rule even applies, or without providing for exceptions. Stereotyping is one prominent type of sweeping generalization; a stereotype derives from general ideas about a group of people without accounting for exceptions, for accuracy, or for whether any sound reasoning lies behind the stereotype.

- Confirmation Bias —a pernicious fallacy that can trip even careful scholars. It occurs when the writer or researcher is so convinced by her point of view that she only seeks to confirm it and, thus, ignores any evidence that would challenge it. Choosing only data that support a preformed conclusion is called cherry picking and is a one-way ticket to skewed results. Related to this fallacy is another—disconfirmation bias—when the writer or researcher puts so much stock in her side of the argument that she does not apply equal critical evaluation to the arguments and evidence that support the other side. In other words, while too easily and uncritically accepting what supports her side, she is unreasonably critical of opposing arguments and evidence.

Example: In the later nineteenth century, when archaeology was a new and thrilling field of study, Heinrich Schliemann excavated the ancient city of Troy, made famous in Homer’s epic poem, The Iliad. In fact, Schliemann used The Iliad as a guide, so when he excavated, he looked to find structures (like walls) and situations (proof of battles) in the archaeological remains. While Schliemann’s work is still considered groundbreaking in many ways, his method was flawed. It allowed him to cherry pick his results and fit them to his expectations–i.e., that his results would fit the myth. When Schliemann sought to confirm story elements from The Iliad in the archaeological record, he risked misinterpreting his data. What if the data was telling a different story than that in The Iliad? How could he know for sure until he put the book down and analyzed the archaeological evidence on its own merits? For more on Schliemann and his famous early excavations, see his Encyclopedia Britannica entry (https://tinyurl.com/y9tk4vou), or look up “Heinrich Schliemann” in the Gale Virtual Reference Library database.

- No True Scotsman —a false claim to purity for something that is too complex for purity, like a group, an identity, or an organization. Those making claims to purity usually attempt to declare that anyone who does not fit their “pure” definition does not belong. For example, national identity is complicated and can mean something different to each person who claims that identity; therefore, it is too complex for a one-size-fits-all definition and for any one litmus test to prove that identity.

Examples: “No real Scot would put ice in his scotch!” “No real man would drink lite beer!” “No real feminist would vote Republican!” Each of these statements assumes that everyone has the same definition for the identities or groups discussed: Scots, men, and feminists. However, the members of each group are themselves diverse, so it is illogical to make such blanket declarations about them. It is actually quite reasonable for a Scottish person to like ice in her scotch and still claim a Scottish identity or for a man to drink lite beer without relinquishing his manhood or for a feminist to vote Republican while still working toward women’s rights.

Working with No Evidence —These fallacies occur when the evidence asserted turns out to be no evidence whatsoever.

- Burden of Proof —This logical fallacy, quite similar to the appeal to ignorance, occurs when the author forgets that she is the one responsible for supporting her arguments and, instead, shifts the burden of proof to the audience.

Example: “Larry stole my painting,” Edith cried. “Prove to me he didn’t!” No: The one making the claim must give reasons and evidence for that claim before anyone else is obligated to refute it. If Edith cannot give sound proof of Larry’s guilt, the argument should be rejected.

- Arguing from Silence or Ignorance —Like the burden of proof fallacy, this one occurs when the author, either implicitly or explicitly, uses a lack of evidence as a type of proof. This is the basis for most conspiracy theory nonsense: the lack of evidence is treated as so hard to believe that the only possible explanation for its absence is a cover-up. Remember, it is the writer’s job to present positive proof (evidence that actually exists and can be literally seen) to support any argument made. If a writer cannot find evidence, he must admit that he may be wrong and then find a new argument!

Example: “There is no proof that Joe the Politician conspired with the Canadians to rig the elections.” “A-ha! That there is nothing to find is proof that he did! He must have paid off everyone involved to bury the evidence.” Lack of proof cannot be–in and of itself–a type of proof because it has no substance; it is a nothing. Is it possible that proof may arise in the future? Yes, but until it does, the argument that Joe and the Canadians rigged an election is illegitimate. Is it possible that Joe both rigged the election and paid people off to hide it? Again, yes, but there are two problems with this reasoning: (1) Possibility, like absence of evidence, is not in itself a type of evidence, and (2) possibility does not equal probability. Just because something is possible does not mean it is probable, let alone likely or a sure thing. Those supporting conspiracy theories try to convince others that lack of proof is a type of proof and that a remote possibility is actually a surety. Both fail the logic test.

- Circular Reasoning —also known as begging the question, occurs when, instead of providing reasons for a claim, the arguer just restates the claim but in a different way. An author cannot sidestep reasons and proof for an argument by just repeating the claim over and over again.

Example: “The death penalty is sinful because it is wrong and immoral.” The conclusion (the death penalty is sinful) looks like it is supported by two premises (that it is wrong, that it is immoral). The problem is that the words “wrong” and “immoral” are too close in meaning to “sinful,” so they are not actual reasons; rather, they are just other ways to state the claim.

- Special Pleading —Anyone who makes a case based on special circumstances without actually providing any reasonable evidence for those circumstances is guilty of special pleading.

Example: “Is there any extra credit I can do to make up for my missing work?” Many students have asked this of their college professors. Embedded within the question is a logical fallacy, the insistence that the student asking it should get special treatment and be rewarded with extra credit even though he missed prior assignments. If the student has logical (and preferably documented) reasons for missing course work, then the fallacy of special pleading does not apply. Those expecting to be given special treatment without reasonable justification have committed the special pleading fallacy.

- Moving the Goalposts —happens when one keeps changing the rules of the game in mid-play without any reasonable justification.

Example: This fallacy occurs in Congress quite a lot, where the rules for a compromise are established in good faith, but one side or the other decides to change those rules at the last minute without good reason or evidence for doing so.

- Wishful Thinking —involves replacing actual evidence and reason with desire, i.e., desire for something to be true. Wanting an idea to be real or true, no matter how intensely, does not constitute rational support. This fallacy often occurs when closely-held ideas and beliefs are challenged, particularly if they are connected to family and identity or if they serve self interest.

Example: People do not like to see their personal heroes tarnished in any way. If a popular sports hero, for example, is accused of a crime, many fans will refuse to believe it because they just don’t want to. This plays right into the wishful thinking fallacy.

Working with False Ideas about Evidence or Reasoning —These fallacies either (1) presume something is a reason for or evidence of something else when that connection has not been adequately or fairly established or (2) unfairly limit one’s choices of possible reasons.

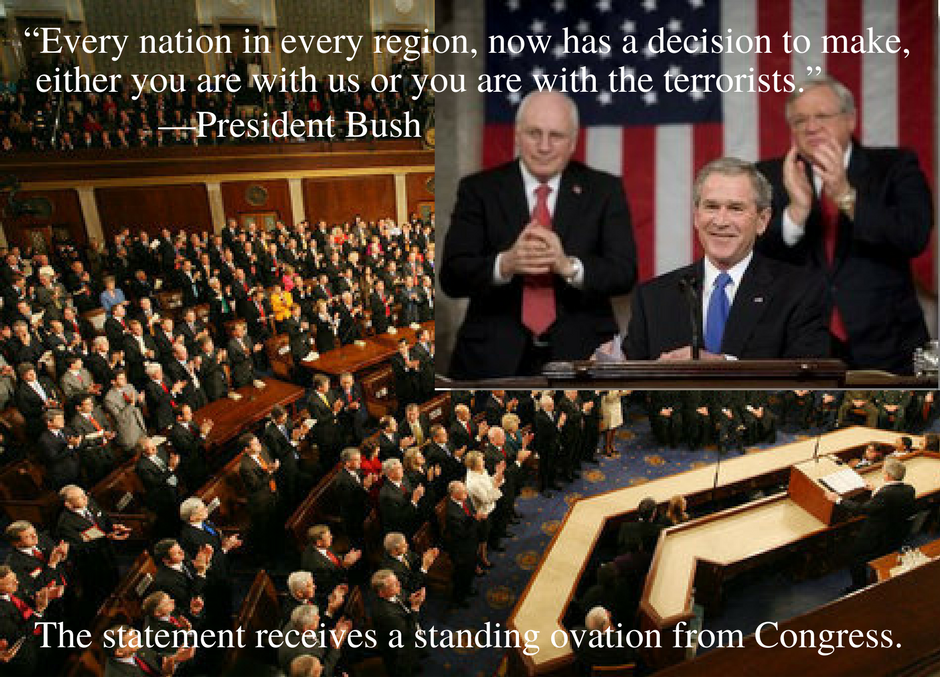

- False Dilemma/Dichotomy —occurs when one presents only two options in an argument when there are, in fact, many more options. Arguments have multiple sides, not just two, so when only two are presented, readers are forced to choose between them when they should be able to draw from a more complex range of options. Another way to talk about the false dichotomy is to call it reductionist because the arguer has reduced the options from many to only two.

Example: “So, are you a dog person or a cat person? Are you a Beatles person or a Rolling Stones person? You can be only one!” Both of these examples provide a false choice between two options when there are clearly others to choose from. One might also reasonably choose both or neither. When an arguer only provides two options, she tries to rig the response and to get the responder to only work within the severely limited framework provided. Life is more complicated than that, so it is unreasonable to limit choices to only two.

- Loaded Question —embeds a hidden premise in the question, so anyone who responds is forced to accept that premise. This puts the responder at an unfair disadvantage because he has to either answer the question and, by doing so, accept the premise, or challenge the question, which can look like he is ducking the issue.

Example: “So, when did you start practicing witchcraft?” The hidden premise here is that the responder is a witch, and any reply is an admission of that premise as fact. An open question, one that does not trick the responder into admitting the presumption of witchcraft, would be this: “Are you a witch?”

False Cause —asserts causes that are more assumptions than actual causes. There are three types of false cause fallacies:

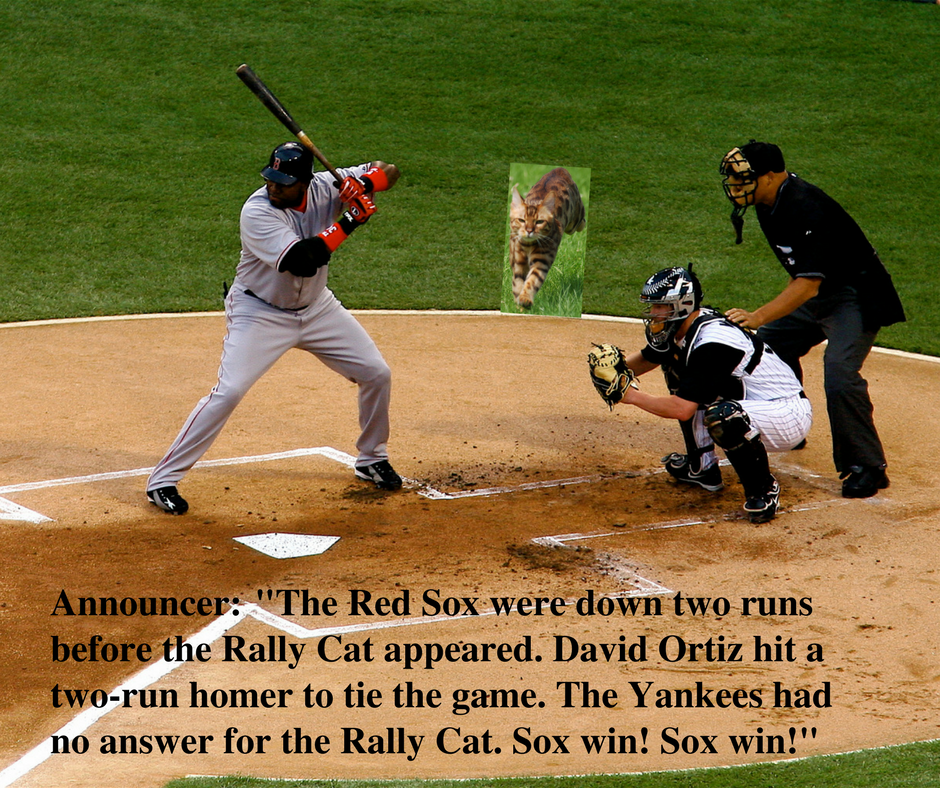

- Post hoc ergo propter hoc —In Latin, this phrase means “after this, therefore, because of this,” which asserts that when one thing happens before another thing, the first must have caused the second. This is a false assumption because, even if the two things are related to each other, they do not necessarily have a causal relationship.

Example: Superstitions draw power from this logical fallacy. If a black cat crosses Joe the Politician’s path, and the next day Joe loses the election, is he justified, logically, in blaming the cat? No. Just because the cat’s stroll happened before the election results does not mean the one caused the other.

- Slippery Slope —the cause/effect version of jumping to a conclusion. A slippery slope argument claims that the first link in a causal chain will inevitably end in the most disastrous result possible, thus working to scare the audience away from the initial idea altogether. Keep in mind, legitimate and logical causal chains can be argued: where one cause leads to a logical effect, which then leads to the next logical effect, which then leads to the next logical effect, and so on. Those using the slippery slope fallacy, however, do not bother to carefully establish a logical chain but rather skip right ahead to the worst possible conclusion.

Example: “Oh no, if I fail this test, my whole life is ruined!” This is a common fear among panicked students but is a prime example of the slippery slope. The student likely imagines this sort of logical chain: a failed test → failed class → getting behind in college → flunking out of college → all future job prospects falling through → total unemployment → abject poverty → becoming a pariah to family and friends → a thoroughly ruined life. The worry is that failing a test, should it even happen at all, will automatically result in the worst possible case: a totally failed life. However, when thought through more calmly and logically, hopefully, the student will realize that many mitigating factors lie between one failed test and total ruination and that the total ruination result is actually quite unlikely.

- Cum hoc ergo propter hoc —In Latin, this phrase translates to “with this, therefore, because of this,” which suggests that because two or more things happen at the same time they must be related. This, however, doesn’t account for other logical possibilities, including coincidence.

Example: “Gah! Why does the phone always ring as we sit down to dinner?” This question implies that the two events have something to do with each other, when it is far more likely that they do not and the timing is simply a coincidence.

Fallacies of Ambiguity

To be ambiguous is to be unclear; thus, fallacies of ambiguity are those that, intentionally or not, confuse the reader through lack of clarity. They create a fog that makes it difficult to see what the conclusion or the reasonable parts of an argument are, or the fog prevents a reasonable conclusion in the first place.

- Quoting out of Context —occurs when quoting someone without providing all the necessary information to understand the author’s meaning. Lack of context means that the original quote’s meaning can be obscured or manipulated to mean something the original author never intended. Usually that context is found in the surrounding original text, which the borrower has either failed to include or deliberately excluded.

Example: Original statement: “You may hand write your assignments but only when instructed to in the assignment schedule.”

Quote used: “You may hand write your assignments.”

Clearly, the quoted part leaves out crucial qualifying information that puts limits on the initial instruction. The scenario may be this: The original statement came from a professor’s syllabus, and the student quoted just the first part to an advisor while trying to register a complaint over a bad grade on an assignment he hand wrote when he wasn’t supposed to. When the student exclaims, “But my professor told me I could hand write my assignments!” he is guilty of muddying the truth by quoting out of context. He left out the part that told him to check the assignment schedule to see whether handwriting was allowed.

- Straw Man —Creating a straw man argument involves taking a potentially reasonable argument and misrepresenting it, usually through scare tactics or oversimplification, i.e., by creating an argument that sounds similar to the original but in reality is not. The straw man argument is designed to be outrageous and upsetting, and thus easier to defeat or get others to reject. Why try to dismantle and rebut a reasonable argument when one can just knock the head off the straw man substitute instead?

Example: “I think we need to get rid of standardized testing in junior high and high school, at least in its current form.” “That’s terrible! I can’t believe you don’t want any standards for students. You just want education to get even worse!” In this scenario, the second person has committed the straw man fallacy. She has distorted the first person’s argument (that standardized testing in its current form should be eliminated) and replaced it with a much more objectionable one (that all educational standards should be eliminated). Because there are more ways than just testing to monitor educational standards, the second person’s argument is a blatant misrepresentation and an oversimplification.

- Equivocation —happens when an author uses abstract or complex terms, which have multiple meanings or many layers to them, in an overly simple or misleading fashion, or without defining the particular sense in which the term is being used.

Example: “I believe in freedom.” The problem with this statement is that it assumes everyone understands exactly what the speaker means by freedom. Freedom from what? Freedom to do what? Freedom in a legal sense? In an intellectual sense? In a spiritual sense? Using a vague sense of a complex concept like freedom leads to the equivocation fallacy.

Fallacies of Inconsistency

This category of fallacies involves a lack of logical consistency within the parts of the argument itself or on the part of the speaker.

- Inconsistency Fallacy —is one of the more blatant fallacies because the speaker is usually quite up-front about his inconsistency. This fallacy involves making contradictory claims but attempting to offset the contradiction by framing one part as a disclaimer and, thus, implying that the disclaimer inoculates the one making it from any challenge.

Example 1: “I’m not a racist but….” If what follows is a racist statement, the one saying this is guilty of the inconsistency fallacy and of making a racist statement. Making a bold claim against racism is not a shield.

Example 2: “I can’t be sexist because I’m a woman.” The speaker, when making this kind of statement and others like it, assumes that she cannot logically be called out for making a sexist statement because she happens to be a member of a group (women) whose members are frequent victims of sexism. If the statements she makes can objectively be called sexist, then she is guilty of both sexism and the inconsistency fallacy.

- False Equivalence —asserts that two ideas or groups or items or experiences are of equal type, standing, and quality when they are not.

Example: The belief in intelligent design and the theory of evolution are often falsely equated. The logical problem lies not with the desire to support one or the other idea but with the idea that these two concepts are the same type of concept. They are not. Intelligent design comes out of belief, mainly religious belief, while evolution is a scientific theory underpinned by factual data. Thus, these two concepts should not be blithely equated. Furthermore, because these two concepts are not the same type, they do not need to be in opposition. In fact, there are those who may well believe in intelligent design while also subscribing to the theory of evolution. In other words, their religious beliefs do not restrict an adherence to evolutionary theory. A religious belief is faith-based and, thus, is not evaluated using the same principles as a scientific theory would be.

- False Balance —applies mainly to journalists who, because they wish to present an appearance of fairness, falsely claim that two opposing arguments are roughly equal to each other when one actually carries much more weight in both reasoning and evidence.

Example: The majority of scientists accept climate change as established by empirical evidence, while a scant few do not; putting one representative of each on a news program, however, implies that they represent an equal number of people, which is clearly false.

Key Takeaways: Logical Fallacies

- Both formal and informal fallacies are errors of reasoning, and if writers rely on such fallacies, even unintentionally, they undercut their arguments, particularly their crucial appeals to logos. For example, if someone defines a key term in an argument in an ambiguous way, fails to provide credible evidence, or tries to distract with irrelevant or inflammatory ideas, her arguments will appear logically weak to a critical audience.

- More than just logos is at stake, however. When listeners or readers spot questionable reasoning or unfair attempts at audience manipulation, they may conclude that an author’s ethics have become compromised. The credibility of the author (ethos) and perhaps the readers’ ability to connect with that writer on the level of shared values (pathos) may well be damaged.