4: Creating and Exploring New Worlds - Web 2.0, Information Literacy, and the Ways We Know

Chapter 4. Creating and Exploring New Worlds: Web 2.0, Information Literacy, and the Ways We Know

Florida State University

This chapter—more of a story, perhaps, than a dialogue between two disciplines interested in writing, although informed by each—identifies the current moment of information literacy (IL) as an ecosystem requiring new ways of researching, including new means of determining credibility of sources. It begins by outlining three periods in the recent history of IL as experienced by the researcher: (1) the period when gatekeepers were available to help assure credibility of sources; (2) the period of online access to information held in brick and mortar libraries, with digitized information providing new ways of organizing information and thus new ways of seeing; and (3) the most recent period located in a wide ecology of interacting sources—academic; mainstream; and “alternative”—sources that include texts, data, and people inside the library, of course, but ranging far beyond it. In such an ecology, as we see in the information ecologies presented in both the Framework for IL (ACRL, 2015) and Rolf Norgaard’s chapter (Chapter 1, this collection), students trace some sources and actively identify and invite others: research, in other words, has become a variegated set of processes, including searching and confirming credibility, but including as well initiating contact with and interacting with sources. Given this new context for research, I also consider how we can introduce students to this new normal of researching and identify some tasks we might set for students so that they learn how to determine what’s credible and what’s not—in addition to considering how, if in the future students are not only knowledge-consumers but also knowledge-makers, we can support this development, too.

Research One: The Traditional Scene

In the fall of 2006, I spent the better part of a day in the Victoria and Albert Museum (V&A) in London, my purpose there to review some sources from the V&A special collection for an article I was writing (see Figure 4.1). To put it more simply, I was conducting a kind of humanities research in a very conventional way—identifying a purpose, tracing textual sources in a sanctioned library, drafting and revising. (Research in other fields takes various forms, of course, including field work and lab work.)

As I learned, the V&A library is very generous with its resources: it shares materials with anyone who can show a simple identity card. This sharing, however, comes with three very noteworthy stipulations. The first is that one can borrow materials only when the V&A is open, and they pretty much keep banker’s hours five days a week, so while access to materials is possible, it is only so within a limited number of days and hours—and this assumes one can travel to London. Second, the materials can be used only onsite; they can be copied on library-approved copying machines, but they cannot be checked out, even overnight. The third is that assuming a patron can get to the V&A at the appointed days and hours, accessing the materials requires an elaborated process. Each item requires a specific protocol, as the V&A (2015) explains:

Figure 4.1. Victoria and Albert Museum in London.

In the interests of security and conservation, materials from Special Collections are issued and consulted near the Invigilation Desk. A seat number will be allocated by the invigilator when the material ordered is ready for consultation. Readers are asked to sign for each item issued. Readers who find that works ordered are “Specials in General Stock” will be asked to collect them from the Invigilation Desk and consult them at the desks provided for the purpose.

In other words, the materials are there, but obtaining them isn’t an expeditious exercise, and using them requires a specific setting. As suggested, this is not an open-shelf library, where the patron might wander among the stacks and peruse the shelves, both practices that can lead to serendipitous discoveries: here the material in question is requested by the researcher and then retrieved by someone else. Some serendipity could occur as the researcher works with the V&A’s materials themselves, of course, but then that discovery could prompt another request protocol.

Nonetheless, it’s worth noting that the materials at the V&A offer an important value: they promise credibility. Of course, this library is a very specific one with a very specific mission; it is very unlike my academic library, which includes materials both credible (e.g., Scientific American) and incredible (e.g., The National Enquirer). If I am in doubt about the credibility of the materials, however, the V&A, like my FSU library, employs faculty and staff who can assist in reviewing materials and determining their credibility. What I thus need to do in such a scenario is identify and access the materials, ask for assistance if needed, and use the materials. Moreover, given its specific mission, a library like the V&A offers print collections that are relatively stable: their materials change with additions, but they don’t change very rapidly; their very permanence promotes a kind of confidence in the research process. Not least, such a research process is built on a tradition that also promotes confidence. Such a scene has supported research for several hundred years; to say that we have re-enacted and participated in such a scene in and of itself endows the researcher with a certain authority.

In sum, the V&A library provides one scene of research, a scene where materials are not always easily accessed, but where the materials themselves endow a kind of authority and whose credibility can be authenticated with the assistance of a library specialist.

Research Two: The Traditional Scene Digitized

When I returned home from London, I needed to do more research, and fortunately, the materials I needed were available in Florida State University’s Strozier Library; even more fortunately, they were available online. In other words, because the digital resources are available 24/7, I could access them even when the brick and mortar library was closed. In this case, assuming I have access—here defined quite differently, not as physical location, but rather as a set of factors all working together: a computing device, Internet access, and an FSU ID—I can read articles and ebooks, often the same research materials available in the brick and mortar library, and I can do so at any time in a 24-hour day. Moreover, because the electronic materials are located in a database, they come with affordances unavailable in print. For example, in accessing an issue of College Composition and Communication (CCC) through JSTOR, a database available through the FSU library that mimics the print resources in the library stacks, I can read the articles online; I can save them; and I can print them; I can export citations via email, BibTex, RefWorks, or Endnote. More important for situating an idea or author, there is a search engine inside both journal and complete database (e.g., CCC; JSTOR) that enables looking for authors, topics, and key terms: I can thus trace a given idea or author throughout a set of articles, and if the source is digitized and in the FSU library—two big conditions, admittedly—I can access it immediately. Other journals offer even more options. For example, someone researching the relationship between medical doctors and patients might consult the Journal of the American Medical Association (JAMA) and, if so, find an article published in 2005, available in FSU’s proxy for JAMA, and, again, read or download it. 
The reader can also immediately link to the articles that it cites in its references since most of them are in the database; the process of finding other sources is thus even easier than the one described above, and by engaging in this process, the reader can begin to create his or her own context for the reading of the article, although it’s worth noting that in terms of reading, we haven’t explored the impact on a reader of links supplied by others. In other words, there is likely a difference between reading a text that is unmarked and reading one with links provided by someone else, as I suggested in reviewing a digitized version of Hill’s Manual of Social and Business Forms:

An addition to the text is a set of links taking the reader to surprising places inside Hill’s—in one case, to an explanation of letters, in another to information about resorts. In that sense, reading this Hill’s is like reading a text with links functioning as annotations: it’s a text of someone else’s reading. Do we find the links others have planted for us an annoyance or an opportunity to read differently and more richly?

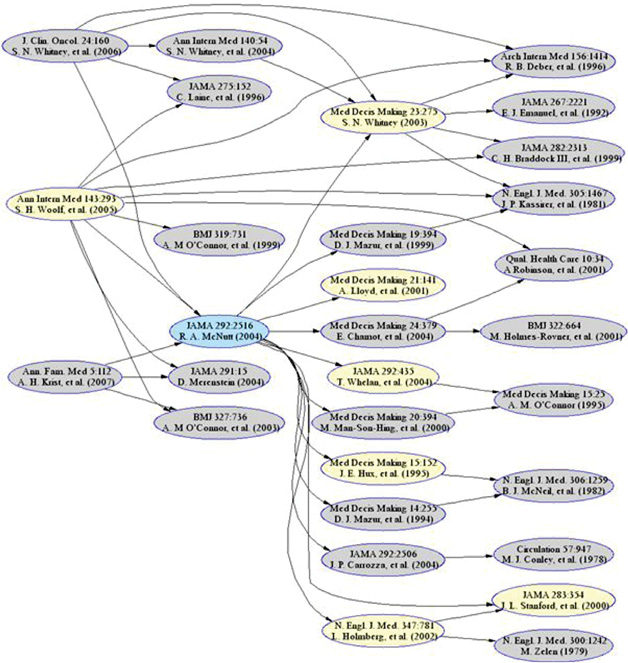

Figure 4.2. JAMA Citation Index.

JAMA also provides a citation index (see Figure 4.2) showing the number of times the article has been cited in other articles and a graph showing how often per year, and it provides links to most of those articles as well.

In this Janus-like way, JAMA provides access to both the research the article draws on and the research to which it contributes: the article thus literally appears not as a stand-alone piece of research, but as one contribution to a larger set of research questions. Moreover, for 25 medical topics, JAMA includes videos of authors discussing the research. And not least, in the FSU online library, there is information available in independent databases, those not linked to journals, including newspapers from around the world, unpublished dissertations, and the like. The resource bank of the online library is thus very full.

As useful as the online library is, however, it’s not without disadvantages to the researcher. For one, the stacks and open shelves of the FSU brick and mortar library have been replaced by links created by others: as indicated above, those may or may not reflect the interests of the researcher, and in any event, online texts preclude the kind of serendipitous self-motivated browsing supported by brick and mortar libraries with open shelves, although it’s fair to note that the online files can promote a modified electronic bread crumbing that may be a kind of digital equivalent. For another, not all materials are digitized: many articles are not, and most books, at least for the moment, are not; the resources are thus simultaneously fuller and diminished. For a third, the life span of electronic materials in any given library is not assured: subscriptions to e-materials can change. Print, the library owns; electronic, it rents. If a journal’s price goes up or the library’s budget goes down (or both), the library may be forced to stop the rental. For a fourth, as with print, formats that have been very useful may disappear: four years ago, JAMA offered a citation map (see Figure 4.3) for each of its articles, a very useful graphical representation visualizing an article’s influence. That affordance is now gone.

Figure 4.3. JAMA Citation Map.

And for one last, the digitized materials themselves are often selectively digitized and thus are incomplete. Including only the “important” texts, they exclude materials, like advertisements, that were a part of each journal issue and that at the least provide information about context. But such excluded materials can also prompt or locate research projects: without such information, for example, it’s not only impossible to complete a project tracing advertisements for textbooks during the advent of writing process in the 1970s and 1980s, but likewise impossible to see how publishers translated and marketed scholars’ research back to the field, and impossible as well for any reader of the digitized journal to develop a fuller sense of the moment’s zeitgeist.

In sum, this scene of research is both richer and poorer than the traditional scene; it offers materials 24/7, and through its database representations, new ways of contextualizing materials—as long as it can offer them.

A Research Ecology

In terms of research, what we see in academic libraries is by definition limited in other ways as well, principally because libraries stock publications: they are not sites of research themselves, but rather places we go to consult research materials, including databases, primary texts, rare books, journal runs, newspapers, and monographs. Put another way, there’s research and there’s publication: research materials are available in libraries, but research itself takes place outside them in many sites—laboratories, field sites (from the Arctic to the neighborhood cemetery), community centers, classrooms, and so on. Historically, research has been reported in many venues, some of them the long form, peer-reviewed journals and books characteristic of traditional library holdings, but also in informal texts—in letters predating journals (Bazerman, 2000); in diaries; in logs; in newspapers and magazines. Such sites of research-making and distribution have always existed, but are now, with the affordances of the Internet, more visible, inclusive, and interactive. It’s commonplace now for researchers to share raw data and early findings in multiple venues ranging from scholarly websites to personal or professional blogs, personally hosted websites, and other social media outlets. Florida State University’s Rhetoric and Composition program, for example, hosts a Digital Postcard Archive (fsucardarchive.org/), and two graduate students in our program have created the Museum of Everyday Writing (https://museumofeverydaywriting.omeka.net/), which they personally host on Omeka, and which links to Facebook and Twitter. Likewise, I knew about Henry Jenkins’ theory of convergence culture over a year before his book on the topic was released because I’d been reading his blog. Of course, given these sources, a researcher needs to determine how credible the information is.

To help students explore this issue, which the Association of College and Research Libraries’ (ACRL, 2015) threshold concept “Research as Inquiry” articulates, I have often assigned a “map of reading and researching” task: each student is to pose a question and trace online where the question takes him or her. As we can see from the map composed by Liane Robertson in Figure 4.4, a question about the impact of personal genetic testing leads to a robust ecology of sources, including academic sources like the New England Journal of Medicine; institutional blogs like Wired Science; newspapers like the Los Angeles Times online; and personal blogs like The Medical Quack. These resources are not all alike nor equivalent in credibility; sorting through them is one research task, and a very large part of that task entails determining the credibility of both claims and evidence that are displayed. Later versions of this assignment have asked students to research in another way: by writing to a source to obtain information that isn’t yet published, a task I have taken up myself. When I read about research on contextualized pedagogical practice and its role in supporting students in science, I emailed Steve Rissing, the researcher quoted in the Inside Higher Ed story, and he replied, helpfully, within a day. Another option in researching, in other words, is to contact a researcher or informant, and with electronic communication, it’s never been easier.

Figure 4.4. Circulation map.

In this new ecosystem, establishing credibility of sources is a larger challenge, but there are frameworks available to help. The ACRL, for example, includes this kind of task in its threshold concept Authority Is Constructed and Contextual. Likewise, building on the thinking about IL created by the National Forum on Information Literacy, the Association of American Colleges and Universities (AAC&U) has issued a definition of IL, and a rubric to match, entirely congruent with the ACRL’s approach. The AAC&U definition (2009) is fairly straightforward: “The ability to know when there is a need for information, to be able to identify, locate, evaluate, and effectively and responsibly use and share that information for the problem at hand.” And as operationalized in the AAC&U VALUE (Valid Assessment of Learning in Undergraduate Education) scoring guides, IL includes five components or dimensions expanding the definition: (1) Determine the extent of information needed; (2) Access the needed information; (3) Evaluate the information and its sources critically; (4) Use information effectively to accomplish a specific purpose; and (5) Access and use information ethically and legally. Although this heuristic for the activities required in research is useful, it doesn’t speak very specifically to the issue of credibility of sources, which is always at play, and never more so than in the current research ecology with its mix of sources and materials. Indeed, speaking to this research ecology, the ACRL (2015) observes that students have a new role to play in it, one involving “a greater role and responsibility in creating new knowledge, in understanding the contours and the changing dynamics of the world of information, and in using information, data, and scholarship ethically.”

Exploring Credibility

When considering the credibility of sources, researchers find four questions in particular helpful:

1. What sources did you find?

2. How credible are they?

3. How do you know?

4. And what will you do with them?

In thinking about credibility—which we can define as the accuracy or trustworthiness of a source—the key question may be “How do you know,” a question that historian Sam Wineberg (1991) can help address. Wineberg’s particular interest is in how students, in both high school and college, understand the making of history, which he locates in three practices useful in many fields. First is corroboration: “Whenever possible, check important details against each other before accepting them as plausible or likely” (p. 77), a standard that is very like the philosopher Walter Fisher’s (1995) “fidelity,” that is, looking for consonance between the new information and what we know to be accurate. Second is sourcing: “When evaluating historical documents, look first to the source or attribution of the document” (p. 79), a practice of consulting attributions that is just as important for scientists studying global warming and sociologists examining police arrest records as it is for historians. Moreover, Wineberg has also found the sequence of checking attribution important in evaluating credibility: historians predictably read attributions before reading a text whereas students, if they check for attributions at all, do so at the conclusion of the reading. Put another way, historians rely on the attribution to contextualize their reading, while students barely attend to it if they do attend to it at all. Third is contextualization: “When trying to reconstruct historical events, pay close attention to when they happened and where they took place” (p. 80). Here Wineberg is, in part, emphasizing the particulars of any given case, and recommending that in researching we attend to those, not to some preconceived idea that we brought to the text with us.

Equally useful is working with Wineberg’s practices in the context of case studies: using these questions together with case studies can help students (and other researchers) learn to use the questions as a heuristic to help decide the credibility of sources and identify which sources to use and how—tasks that the Framework for IL addresses in two threshold concepts, Authority is Constructed and Contextual and Searching as Strategic Exploration. Here I highlight two case studies: one in which students compare kinds of encyclopedias and contribute to one of them, and a second focused on some thought experiments raising epistemological questions related to credibility.

A first case study focuses on an analysis of an encyclopedia entry and a Wikipedia entry: as defined in the assignment, this comparison provides “an opportunity to consider how a given term is defined in two spaces purporting to provide information of the same quality”; the task is “to help us understand how they are alike and different and what one might do in creating a Wikipedia entry.” A simple comparison taps what we all suspect: a conventional encyclopedia, written by experts, presents an authorized synopsis on multiple topics, whereas Wikipedia shares information identified by several people, none of whom may bring any credentialed expertise to the topic. But this comparison isn’t an evaluation. It’s not that one of these is credible and one is not: each has different virtues, as students discover. An encyclopedia may be credible, but its entries are usually short, including very few references; it’s largely a verbal text; and it could be outdated. Wikipedia typically includes longer entries (often longer by a factor of 3) and includes links to other sources so that more exploration is easily possible, and its entries are often timely—assuming that they are not removed. But are the entries credible? In Wineberg’s terms, can we corroborate their claims? What do their attributions tell us?

Often students arrive at the same conclusion as Clay Shirkey (2009) in Here Comes Everybody:

Because Wikipedia is a process, not a product, it replaces guarantees offered by institutions with probabilities supported by process: if enough people care enough about an article to read it, then enough people will care enough to improve it, and over time this will lead to a large enough body of good enough work to begin to take both availability and quality of articles for granted, and to integrate Wikipedia into daily use by millions. (p. 140)

This latter claim is untested, of course, and can lead to discussions about the value, or not, of peer review: what is the relationship between a scholarly process of peer review and a Wikipedian crowdsourcing, and why is such a question important? Likewise, Shirkey’s claim is easier to consider when someone has experience in the process, that is, if students are asked not only to compare Wikipedia with another like text, but also to contribute to it themselves, either by adding to or modifying an existing entry or by beginning a new one. What students learn is twofold: about composing, of course, and a very different composing than they are accustomed to, but also about the making of knowledge, including, for example, how a claim that seems neutral to them is deleted as biased by one of Wikipedia’s editors or how they too have to provide a credible, “neutral” source in order for a claim to be published on the site. In other words, asking students to compare different kinds of encyclopedias and to contribute to one of them helps them understand firsthand the processes of sourcing and of establishing credibility. And in terms of applying this assignment to their own research, students find that there are no easy answers to Wineberg’s questions and that one encyclopedia, whether a traditional encyclopedia or Wikipedia, isn’t inherently better than the next. They also learn that in conducting their own research, it might be useful to consult both as starting places, to corroborate them against each other, and to explore the resources identified in each.

Thought Experiments as Case Studies

Other kinds of case studies, which I have used with students and in faculty workshops, raise other kinds of questions, especially about the relationship of credibility and epistemology. To introduce this issue, I call on topical issues from a variety of fields. For example, we might consider issues raised by a movie. Several years ago, the movie Bright Star portrayed the life of John Keats: is it an accurate portrayal? Is it a good movie? These different questions, both related to the ACRL (2015) threshold concept Research as Inquiry, call for different approaches. To explore the first, we might consult Keats’ poetry and his personal writings; we might consult accounts of Keats provided by colleagues and friends; we might consult histories of the period. To explore the second, its value as a movie, we might view movies that have received awards, especially other biographical movies, like Amadeus and The Imitation Game. And more philosophically, we might consider the relationship between accuracy and value, especially in the context of adaptation studies, which take as their focus questions about the relationship between a print literary text, like Pride and Prejudice, and its movie version(s). A single movie and two related questions, as a thought experiment, pointing us in very different directions, helps demonstrate how we know what (we think) we know, and in Wineberg’s terms, helps us consider how we might (1) corroborate; (2) authenticate in terms of attribution; and (3) employ the specifics of the movie in the context of historical and literary records.

A second thought experiment is less canonical: it focuses on the website “Patients like Me” (http://www.patientslikeme.com/). Late in the 1990s, James Heywood’s brother Stephen was diagnosed with ALS (Lou Gehrig’s disease); frustrated by his inability to be helpful, Heywood collaborated with two friends to create the site, a

free online community for people with life-changing diseases, including ALS, Multiple Sclerosis, Parkinson’s disease, HIV/AIDS, Mood Disorders, Fibromyalgia and orphan diseases (such as Devic’s Neuromyelitis Optica, Progressive Supranuclear Palsy and Multiple System Atrophy). Our mission is to improve the lives of patients through new knowledge derived from their shared real-world experiences and outcomes. To do so, we give our members easy-to-use, clinically validated outcome management tools so they can share all of their disease-related medical information. Our website is also designed to foster social interaction for patients to share personal experiences and provide one another with support. The result is a patient-centered platform that improves medical care and accelerates the research process by measuring the value of treatments and interventions in the real world. (2015)

In other words, this is a site that for the first time in history compiles patients’ accounting of their own diseases; that’s impressive. But is the information on it credible? Again, ACRL threshold concepts are useful here, especially Authority Is Constructed and Contextual and Searching as Strategic Exploration. Speaking to the first threshold concept, for instance, one ALS patient claims to have had ALS for 21 years: given the disease’s typical trajectory—most patients die within five years—he is a very unusual person. Here, we might consider the value of self-reported data, both on this site and in other, more conventional studies, like those that informed early accounts of composing processes. In addition, we might consider what we learn and how credible the aggregated information based on such data is. PatientsLikeMe offers considerable data; each disease community page, for example, includes statistics speaking to how many members of the site have the disease, how recently profiles have been updated, and how many new patients with the disease have joined, as well as a bar graph showing the age range of patients and a pie chart showing the percentage of patients reporting gender. Patients themselves provide considerable information, including treatment types and their efficacy, which is then compiled into treatment reports with treatment descriptions and efficacies, numbers of patients who use the treatment and for what purpose, reported side effects of use and so on. Some think that the information is credible: as Heywood (2009) explains, it’s shared with pharmaceutical companies (which is how the site is financially viable): “We are a privately funded company that aggregates our members’ health information to do comparative analysis and we sell that information to partners within the industry (e.g., pharmaceutical, insurance companies, medical device companies, etc.)” (p. 1). But do we find this information credible? 
In Wineberg’s terms, how might we corroborate these data?

Not least is the thought experiment regarding global warming, an exercise that has changed over time. In the 1990s, the question was whether the planet was experiencing the beginnings of global warming and how we would assess that. Today, the question has shifted to how quickly global warming is affecting the earth. Is a massive flood just experienced in India a sign of or an index to global warming? In the millions of years of earth-time, haven’t we seen global warming before? What are the effects of global warming, and what do they mean for public policy? And a related question that seems to be asked daily in all parts of the world: is our current weather normal? What is normal, and normal for what period of time—the last 10, 100, 1,000, or million years? What is current—this hour, this day, this week, this month? What is weather and how is it related to climate? Would we create our own records, consult back issues of The Farmer’s Almanac, examine diaries from centuries ago, log onto the records available on weather.com or accuweather or wunderground, or would we prefer data accumulated by the U.S. government? Would we include some mix of these data? Many questions like these are taken up by citizen scientists who are guided by rudimentary scientific protocols, as they have historically: Charles Darwin, for example, relied on 19th century homemakers in the U.S. to collect data for him. More generally, however, examining such protocols provides another window into how credibility is established, a window that seems increasingly wide given the availability of raw data and the role of interested laypeople in gathering them. Thus, Wineberg’s questions are helpful here as well, but they also prompt new ways of thinking, too. Given that much of history isn’t recorded, corroboration will probably need to include multiple kinds of materials, person-made and nature-recorded. What signs in nature might help us—rings on trees, for example? 
Given that attribution is important, what is the relative value to this project of a 17th century diary, a weather.com report, and U.S. government data? And given Wineberg’s interest in specifics, what are the signs of warming that we may have missed? What are signs that we may have mis-interpreted?

Conclusion

As we see through the concept of a research ecosystem, establishing credibility is increasingly difficult. In a very short period of time (less than the lifetime of many current academics), we have gone from a formalized IL system with human interpreters to an ecology constituted of the valuable and the incredible: facts, data, personal narrative, rumors, information, and misinformation, all inhabiting the same sphere, each info bit circulating as though it carried the same value as all the others, each info bit connected to other info bits and also disconnected from others in a seemingly random way. The good news, of course, is that more information is available; the more challenging news is that we are all called on to make more sense of that information, to decide what’s credible, how it’s credible, and how we know that, a task that, given the thought experiments closing this chapter, is new not only for students, but for most of us.

One way to begin taking up this challenge is through the use of case studies, which raise very different kinds of questions and which can be put into dialogue with Sam Wineberg’s (1991) schema for establishing credibility. Testing claims and evidence, that is, establishing credibility, isn’t easy, but with the lenses of corroboration, attribution, and specifics, it is more achievable.

Note

1. As explained by AAC&U, the VALUE project is “a campus-based assessment initiative sponsored by AAC&U as part of its LEAP initiative. VALUE provides needed tools to assess students’ own authentic work, produced across their diverse learning pathways and institutions, to determine whether and how well they are progressing toward graduation-level achievement in learning outcomes that both employers and faculty consider essential. VALUE builds on a philosophy of learning assessment that privileges multiple expert judgments and shared understanding of the quality of student work through the curriculum, cocurriculum, and beyond over reliance on standardized tests administered to samples of students disconnected from an intentional course of study.”

References

Association of College and Research Libraries (ACRL). (2015). Framework for information literacy for higher education. Retrieved from http://www.ala.org/acrl/standards/il...k#introduction

Association of American Colleges and Universities. (2009). VALUE Rubrics. Retrieved from https://www.aacu.org/value/rubrics

Bazerman, C. (2000). Letters and the social grounding of differentiated genres. In D. Barton & N. Hall (Eds.), Letter writing as a social practice (pp. 15-31). London: John Benjamins.

Fisher, W., & Goodman, R.F. (Eds.). (1995). Rethinking knowledge: Reflections across the disciplines. New York: State University of New York Press.

Heywood, J. (2009). Testimony before the National Committee on Vital and Health Statistics Subcommittee on Privacy, Confidentiality and Security. Retrieved from http://www.ncvhs.hhs.gov/wp-content/.../090520p04.pdf

Robertson, L. (2008). Circulation Map. Digital Revolution and Convergence Culture.

Shirkey, C. (2009). Here comes everybody: The power of organizing without organizations. New York: Penguin Books.

Victoria and Albert Museum. (2007). National Art Library Book Collections. Retrieved from http://www.vam.ac.uk/content/articles/n/national-art-library-book-collections/

Wineberg, S. (1991). Historical problem solving: A study of the cognitive processes used in the evaluation of documentary and pictorial evidence. Journal of Educational Psychology, 83(1), 73-87.

Yancey, K. B. (Forthcoming 2016). Print, digital, and the liminal counterpart (in-between): The lessons of Hill’s Manual of Social and Business Forms for rhetorical delivery. Enculturation.