2.11: Proofs and the Eight Valid Forms of Inference


Although truth tables are our only formal method of deciding whether an argument is valid or invalid in propositional logic, there is another formal method of proving that an argument is valid: the method of proof. While you cannot construct a proof to show that an argument is invalid, you can construct proofs to show that an argument is valid. Proofs are helpful because they allow us to show that certain arguments are valid much more efficiently than truth tables do. For example, consider the following argument:

1. (R v S) ⊃ (T ⊃ K)

2. ~K

3. R v S /∴ ~T

(Note: in this section I will be writing the conclusion of the argument to the right of the last premise, in this case premise 3. As before, the conclusion we are trying to derive is denoted by the “therefore” sign, “∴”.) We could attempt to prove this argument is valid with a truth table, but the truth table would be 16 rows long because four different atomic propositions occur in this argument: R, S, T, and K. If there were 5 or 6 different atomic propositions, the truth table would be 32 or 64 rows long! However, as we will soon see, we could also prove this argument is valid with only two additional lines. That is a much more efficient way of establishing that this argument is valid. We will do this a little later, after we have introduced the 8 valid forms of inference that you will need in order to do proofs. Each line of the proof will be justified by citing one of these rules, with the last line of the proof being the conclusion that we are ultimately trying to establish. I will introduce the 8 valid forms of inference in groups, starting with the rules that utilize the horseshoe and negation.
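To make the growth in table size concrete, here is a minimal Python sketch. Python and the helper name `implies` are our own choices for illustration; the text itself uses no code. It lists all 2^4 = 16 assignments to R, S, T, and K and searches them for a row on which every premise is true and the conclusion, ~T, is false.

```python
from itertools import product

def implies(a, b):
    # The horseshoe is false only when the antecedent is true and the consequent false.
    return (not a) or b

# One row per assignment of True/False to R, S, T, K.
rows = list(product([True, False], repeat=4))
print(len(rows))  # 16
print([2 ** n for n in (4, 5, 6)])  # [16, 32, 64]: rows needed for 4, 5, or 6 atomic propositions

# A counterexample row makes every premise true and the conclusion ~T false (i.e., T true).
counterexamples = [
    (R, S, T, K)
    for (R, S, T, K) in rows
    if implies(R or S, implies(T, K)) and not K and (R or S) and T
]
print(counterexamples)  # []: no counterexample, so the argument is valid
```

An empty list means there is no such row, which is exactly what the truth-table test of validity requires. The drawback is that the number of rows doubles with every new atomic proposition.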

The first of the 8 forms of inference is “modus ponens,” which is Latin for “the way that affirms.” Modus ponens has the following form:

- p ⊃ q

- p

- ∴ q

What this form says, in words, is that if we have asserted a conditional statement (p ⊃ q) and we have also asserted the antecedent of that conditional statement (p), then we are entitled to infer the consequent of that conditional statement (q). For example, if I asserted the conditional, “if it is raining, then the ground is wet” and I also asserted “it is raining” (the antecedent of that conditional) then I (or anyone else, for that matter) am entitled to assert the consequent of the conditional, “the ground is wet.”

As with any of the valid forms of inference in this section, we can prove that modus ponens is valid by constructing a truth table. As you can see from the truth table below, this argument form passes the truth table test of validity (since there is no row of the truth table on which the premises are all true and yet the conclusion is false).

| p | q | p ⊃ q (premise 1) | p (premise 2) | q (conclusion) |
|---|---|---|---|---|
| T | T | T | T | T |
| T | F | F | T | F |
| F | T | T | F | T |
| F | F | T | F | F |
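The truth-table test can also be run mechanically. The following is a minimal Python sketch; the language and the helper names `implies` and `is_valid` are our own, not part of the text. It enumerates every row and reports an argument form valid exactly when no row makes all the premises true and the conclusion false, which is the test just applied to modus ponens.

```python
from itertools import product

def implies(a, b):
    """Truth function for the horseshoe (material conditional)."""
    return (not a) or b

def is_valid(premises, conclusion, num_vars):
    """Truth-table test: valid iff no row makes every premise true and the conclusion false."""
    for row in product([True, False], repeat=num_vars):
        if all(premise(*row) for premise in premises) and not conclusion(*row):
            return False  # found a counterexample row
    return True

# Modus ponens: p ⊃ q, p, therefore q
mp_premises = [lambda p, q: implies(p, q), lambda p, q: p]
mp_conclusion = lambda p, q: q
print(is_valid(mp_premises, mp_conclusion, 2))  # True
```

Each premise and the conclusion are written as functions of the same variables in the same order, so every row assigns one truth value to each letter.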

Thus, any argument that has this same form is valid. For example, the following argument also has this same form (modus ponens):

- (A ⋅ B) ⊃ C

- (A ⋅ B)

- ∴ C

In this argument we can assert C according to the rule, modus ponens. This is so even though the antecedent of the conditional is itself complex (i.e., it is a conjunction). That doesn’t matter. The first premise is still a conditional statement (since the horseshoe is the main operator) and the second premise is the antecedent of that conditional statement. The rule modus ponens says that if we have that much, we are entitled to infer the consequent of the conditional.

We can actually use modus ponens in the first argument of this section:

1. (R v S) ⊃ (T ⊃ K)

2. ~K

3. R v S /∴ ~T

4. T ⊃ K Modus ponens, lines 1, 3

Here I have written, to the right of the new line of the proof, the valid form of inference (or rule) that justifies that line, as well as the lines to which that rule applies. In this case, I have derived “T ⊃ K” from lines 1 and 3 of the argument by modus ponens. Notice that line 1 is a conditional statement and line 3 is the antecedent of that conditional statement. This proof isn’t finished yet, since we have not yet derived the conclusion we are trying to derive, namely, “~T.” We need a different rule to derive that, which we will introduce next.

The next form of inference is called “modus tollens,” which is Latin for “the way that denies.” Modus tollens has the following form:

- p ⊃ q

- ~q

- ∴ ~p

What this form says, in words, is that if we have asserted a conditional statement (p ⊃ q) and we have also asserted the negated consequent of that conditional (~q), then we are entitled to infer the negated antecedent of that conditional statement (~p). For example, if I asserted the conditional, “if it is raining, then the ground is wet” and I also asserted “the ground is not wet” (the negated consequent of that conditional) then I am entitled to assert the negated antecedent of the conditional, “it is not raining.” It is important to see that any argument that has this same form is a valid argument. For example, the following argument is also an argument with this same form:

- C ⊃ (E v F)

- ~(E v F)

- ∴ ~C

In this argument we can assert ~C according to the rule, modus tollens. This is so even though the consequent of the conditional is itself complex (i.e., it is a disjunction). That doesn’t matter. The first premise is still a conditional statement (since the horseshoe is the main operator) and the second premise is the negated consequent of that conditional statement. The rule modus tollens says that if we have that much, we are entitled to infer the negated antecedent of the conditional.
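The same kind of brute-force check can confirm both the form of modus tollens and the particular instance with a complex consequent. This Python sketch is illustrative only: `implies` and `is_valid` are hypothetical helper names, and the instance simply treats C, E, and F as its three atomic propositions.

```python
from itertools import product

def implies(a, b):
    return (not a) or b

def is_valid(premises, conclusion, num_vars):
    # Valid iff no row makes every premise true while the conclusion is false.
    return not any(
        all(prem(*row) for prem in premises) and not conclusion(*row)
        for row in product([True, False], repeat=num_vars)
    )

# The form: p ⊃ q, ~q, therefore ~p
form_valid = is_valid(
    [lambda p, q: implies(p, q), lambda p, q: not q],
    lambda p, q: not p,
    2,
)

# The instance: C ⊃ (E v F), ~(E v F), therefore ~C
instance_valid = is_valid(
    [lambda c, e, f: implies(c, e or f), lambda c, e, f: not (e or f)],
    lambda c, e, f: not c,
    3,
)
print(form_valid, instance_valid)  # True True
```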

We can use modus tollens to complete the proof we started above:

1. (R v S) ⊃ (T ⊃ K)

2. ~K

3. R v S /∴ ~T

4. T ⊃ K Modus ponens, lines 1, 3

5. ~T Modus tollens, lines 2, 4

Notice that the last line of the proof is the conclusion that we are supposed to derive and that each statement that I have derived (i.e., lines 4 and 5) has a rule to the right. The rule cited is the rule that justifies the statement being derived, and the lines cited are the previous lines of the proof to which that rule applies. This is what is called a proof. A proof is a series of statements, starting with the premises and ending with the conclusion, where each additional statement after the premises is derived from some previous line(s) of the proof using one of the valid forms of inference. We will practice this some more in the exercise at the end of this section.
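The definition of a proof is mechanical enough that a program can check one. The sketch below is a hypothetical, deliberately limited Python checker: it knows only modus ponens and modus tollens (abbreviated `MP` and `MT`, our own shorthand), encodes formulas as nested tuples of our own design, and verifies that each derived line follows from the lines it cites and that the last line is the conclusion.

```python
from itertools import permutations

# Hypothetical encoding: atoms are strings; compound formulas are tuples
# ("not", A), ("or", A, B), ("imp", A, B).

def is_imp(f):
    return isinstance(f, tuple) and f[0] == "imp"

def follows(rule, cited, new):
    """True if `new` follows from the two cited formulas by MP or MT, in either citation order."""
    if len(cited) != 2:
        return False
    for a, b in permutations(cited):
        if rule == "MP" and is_imp(a) and a[1] == b and a[2] == new:
            return True  # a is p ⊃ q, b is p, new is q
        if rule == "MT" and is_imp(a) and b == ("not", a[2]) and new == ("not", a[1]):
            return True  # a is p ⊃ q, b is ~q, new is ~p
    return False

def check_proof(premises, steps, conclusion):
    """Each step is (formula, rule, cited line numbers); proof lines are numbered from 1."""
    lines = list(premises)
    for formula, rule, cited in steps:
        if any(n < 1 or n > len(lines) for n in cited):
            return False  # a step may only cite lines that already exist
        if not follows(rule, [lines[n - 1] for n in cited], formula):
            return False
        lines.append(formula)
    return lines[-1] == conclusion  # the last line must be the conclusion

# The proof of the opening argument: premises 1-3, then lines 4 and 5.
r_or_s = ("or", "R", "S")
premises = [("imp", r_or_s, ("imp", "T", "K")), ("not", "K"), r_or_s]
steps = [
    (("imp", "T", "K"), "MP", (1, 3)),  # 4. T ⊃ K   Modus ponens, lines 1, 3
    (("not", "T"), "MT", (2, 4)),       # 5. ~T      Modus tollens, lines 2, 4
]
print(check_proof(premises, steps, ("not", "T")))  # True
```

Citing the wrong lines, for example deriving ~T by modus tollens from lines 1 and 2, makes `check_proof` return False, since line 2 is not the negated consequent of line 1.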

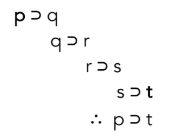

The next form of inference is called “hypothetical syllogism.” This is what ancient philosophers called “the chain argument” and it should be obvious why in a moment. Here is the form of the rule:

- p ⊃ q

- q ⊃ r

- ∴ p ⊃ r

As you can see, the conclusion of this argument links p and r together in a conditional statement. We could continue adding conditionals such as “r ⊃ s” and “s ⊃ t” and the inferences would be just as valid. And if we lined them all up as I have below, you can see why ancient philosophers referred to this valid argument form as a “chain argument”:

- p ⊃ q

- q ⊃ r

- r ⊃ s

- s ⊃ t

Notice how the consequent of each preceding conditional statement links up with the antecedent of the next conditional statement in such a way as to create a chain. The chain could be as long as we liked, but the rule that we will cite in our proofs only connects two different conditional statements together. As before, it is important to realize that any argument with this same form is a valid argument. For example,

- (A v B) ⊃ ~D

- ~D ⊃ C

- ∴ (A v B) ⊃ C

Notice that the consequent of the first premise and the antecedent of the second premise are exactly the same term, “~D”. That is what allows us to “link” the antecedent of the first premise and the consequent of the second premise together in a “chain” to infer the conclusion. Being able to recognize the forms of these inferences is an important skill that you will have to become proficient at in order to do proofs.
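A truth-table check in Python confirms both the two-premise rule and a longer chain of four conditionals, from p ⊃ q through s ⊃ t to the conclusion p ⊃ t. This is an illustrative sketch; the helpers `implies` and `is_valid` are our own names.

```python
from itertools import product

def implies(a, b):
    return (not a) or b

def is_valid(premises, conclusion, num_vars):
    # Valid iff no row makes every premise true while the conclusion is false.
    return not any(
        all(prem(*row) for prem in premises) and not conclusion(*row)
        for row in product([True, False], repeat=num_vars)
    )

# Hypothetical syllogism: p ⊃ q, q ⊃ r, therefore p ⊃ r
hs_valid = is_valid(
    [lambda p, q, r: implies(p, q), lambda p, q, r: implies(q, r)],
    lambda p, q, r: implies(p, r),
    3,
)

# A longer chain: p ⊃ q, q ⊃ r, r ⊃ s, s ⊃ t, therefore p ⊃ t
chain_valid = is_valid(
    [
        lambda p, q, r, s, t: implies(p, q),
        lambda p, q, r, s, t: implies(q, r),
        lambda p, q, r, s, t: implies(r, s),
        lambda p, q, r, s, t: implies(s, t),
    ],
    lambda p, q, r, s, t: implies(p, t),
    5,
)
print(hs_valid, chain_valid)  # True True
```

In a proof, though, the rule itself is cited for two conditionals at a time, so a longer chain is built by applying it repeatedly.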

The next four forms of inference we will introduce utilize conjunction, disjunction and negation in different ways. We will start with the rule called “simplification,” which has the following form:

- p ⋅ q

- ∴ p

What this rule says, in words, is that if we have asserted a conjunction then we are entitled to infer either one of the conjuncts. This is the rule that I introduced in the first section of this chapter. It is a pretty “obvious” rule: so obvious, in fact, that we might even wonder why we have to state it. However, every form of inference that we will introduce in this section should be obvious; that is the point of calling them basic forms of inference. They are some of the simplest forms of inference, whose validity should be transparently obvious. The idea of a proof is that although the inference being made in the argument is not obvious, we can break that inference down into steps, each of which is obvious. Thus, the obvious inferences ultimately justify the non-obvious inference being made in the argument. Those obvious inferences thus function as rules that we use to justify each step of the proof. Simplification is a prime example of one of the more obvious rules.

As before, it is important to realize that any inference that has the same form as simplification is a valid inference. For example,

- (A v B) ⋅ ~(C ⋅ D)

- ∴ (A v B)

is a valid inference because it has the same form as simplification. That is, the premise is a conjunction (since the dot is the main operator of the sentence) and the conclusion infers one of the conjuncts of that conjunction. (Just think of the “A v B” as the “p” and the “~(C ⋅ D)” as the “q”.)
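Because the rule lets us infer either conjunct, a short Python sketch can check both directions against the truth table. The helper names `is_valid` and `both` are our own, chosen for illustration.

```python
from itertools import product

def is_valid(premises, conclusion, num_vars):
    # Valid iff no row makes every premise true while the conclusion is false.
    return not any(
        all(prem(*row) for prem in premises) and not conclusion(*row)
        for row in product([True, False], repeat=num_vars)
    )

def both(p, q):
    # The premise p ⋅ q
    return p and q

left = is_valid([both], lambda p, q: p, 2)   # therefore p
right = is_valid([both], lambda p, q: q, 2)  # therefore q
print(left, right)  # True True
```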

The next rule we will introduce is called “conjunction” and is like the reverse of simplification. (Don’t confuse the rule called conjunction with the type of complex proposition called a conjunction.) Conjunction has the following form:

- p

- q

- ∴ p ⋅ q

What this rule says, in words, is that if you have asserted two different propositions, then you are entitled to assert the conjunction of those two propositions. As before, it is important to realize that any inference that has the same form as conjunction is a valid inference. For example,

- A ⊃ B

- C v D

- ∴ (A ⊃ B) ⋅ (C v D)

is a valid inference because it has the same form as conjunction. We are simply conjoining two propositions together; it doesn’t matter whether those propositions are atomic or complex. In this case, of course, the propositions we are conjoining together are complex, but as long as those propositions have already been asserted as premises in the argument (or derived by some other valid form of inference), we can conjoin them together into a conjunction.
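As a quick illustration (a Python sketch with our own helper names `implies` and `is_valid`), the truth-table test accepts both the bare form of conjunction and the instance with complex conjuncts, treating A, B, C, and D as the atomic propositions.

```python
from itertools import product

def implies(a, b):
    return (not a) or b

def is_valid(premises, conclusion, num_vars):
    # Valid iff no row makes every premise true while the conclusion is false.
    return not any(
        all(prem(*row) for prem in premises) and not conclusion(*row)
        for row in product([True, False], repeat=num_vars)
    )

# Conjunction: p, q, therefore p ⋅ q
form_valid = is_valid([lambda p, q: p, lambda p, q: q], lambda p, q: p and q, 2)

# The instance: A ⊃ B, C v D, therefore (A ⊃ B) ⋅ (C v D)
instance_valid = is_valid(
    [lambda a, b, c, d: implies(a, b), lambda a, b, c, d: c or d],
    lambda a, b, c, d: implies(a, b) and (c or d),
    4,
)
print(form_valid, instance_valid)  # True True
```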

The next form of inference we will introduce is called “disjunctive syllogism” and it has the following form:

- p v q

- ~p

- ∴ q

In words, this rule states that if we have asserted a disjunction and we have asserted the negation of one of the disjuncts, then we are entitled to assert the other disjunct. Once you think about it, this inference should be pretty obvious. If we are taking for granted the truth of the premises (that either p or q is true, and that p is not true), then it has to follow that q is true in order for the original disjunction to be true. (Remember that we must assume the premises are true when evaluating whether an argument is valid.) If I assert that it is true that either Bob or Linda stole the diamond, and I assert that Bob did not steal the diamond, then it has to follow that Linda did. That is a disjunctive syllogism. As before, any argument that has this same form is a valid argument. For example,

- ~A v (B ⋅ C)

- ~~A

- ∴ B ⋅ C

is a valid inference because it has the same form as disjunctive syllogism. The first premise is a disjunction (since the wedge is the main operator), the second premise is simply the negation of the left disjunct, “~A”, and the conclusion is the right disjunct of the original disjunction. It may help you to see the form of the argument if you treat “~A” as the p and “B ⋅ C” as the q. Also notice that the second premise contains a double negation. Your English teacher may tell you never to use double negatives, but as far as logic is concerned, there is absolutely nothing wrong with a double negation. In this case, our left disjunct in premise 1 is itself a negation, while premise 2 is simply a negation of that left disjunct.
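The double negation causes no trouble for a mechanical check either. In this illustrative Python sketch (the helper name `is_valid` is our own), “~~A” is written as `not (not a)`, and the instance is tested alongside the bare form.

```python
from itertools import product

def is_valid(premises, conclusion, num_vars):
    # Valid iff no row makes every premise true while the conclusion is false.
    return not any(
        all(prem(*row) for prem in premises) and not conclusion(*row)
        for row in product([True, False], repeat=num_vars)
    )

# Disjunctive syllogism: p v q, ~p, therefore q
form_valid = is_valid([lambda p, q: p or q, lambda p, q: not p], lambda p, q: q, 2)

# The instance: ~A v (B ⋅ C), ~~A, therefore B ⋅ C
instance_valid = is_valid(
    [lambda a, b, c: (not a) or (b and c), lambda a, b, c: not (not a)],
    lambda a, b, c: b and c,
    3,
)
print(form_valid, instance_valid)  # True True
```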

The next rule we’ll introduce is called “addition.” It is not quite as “obvious” a rule as the ones we’ve introduced above. However, once you understand the conditions under which a disjunction is true, then it should be obvious why this form of inference is valid. Addition has the following form:

- p

- ∴ p v q

What this rule says, in words, is that if we have asserted some proposition, p, then we are entitled to assert the disjunction of that proposition p and any other proposition q we wish. Here’s the simple justification of the rule. If we know that p is true, and a disjunction is true if at least one of the disjuncts is true, then we know that the disjunction p v q is true even if we don’t know whether q is true or false. Why? Because it doesn’t matter whether q is true or false, since we already know that p is true. The hardest thing to understand about this rule is why we would ever want to use it. The best answer I can give you for that right now is that it can help us out when doing proofs.[3]

As before, it is important to realize that any argument that has this same form is a valid argument. For example,

- A v B

- ∴ (A v B) v (~C v D)

is a valid inference because it has the same form as addition. The premise asserts a statement (which in this case is complex: a disjunction) and the conclusion is a disjunction of that statement and some other statement. In this case, that other statement is itself complex (a disjunction). But an argument or inference can have the same form regardless of whether the components of its sentences are atomic or complex. That is the important lesson that I have been trying to drill in throughout this section.
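A truth-table check makes the justification of addition visible: every row on which the premise is true also makes the conclusion true, whatever value the added disjunct takes. This is a Python sketch with our own helper name `is_valid`.

```python
from itertools import product

def is_valid(premises, conclusion, num_vars):
    # Valid iff no row makes every premise true while the conclusion is false.
    return not any(
        all(prem(*row) for prem in premises) and not conclusion(*row)
        for row in product([True, False], repeat=num_vars)
    )

# Addition: p, therefore p v q
form_valid = is_valid([lambda p, q: p], lambda p, q: p or q, 2)

# The instance: A v B, therefore (A v B) v (~C v D)
instance_valid = is_valid(
    [lambda a, b, c, d: a or b],
    lambda a, b, c, d: (a or b) or ((not c) or d),
    4,
)
print(form_valid, instance_valid)  # True True
```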

The last of our 8 valid forms of inference is called “constructive dilemma” and is the most complicated of them all. It may be most helpful to introduce it using an example. Suppose I reasoned thus:

The killer is either in the attic or the basement. If the killer is in the attic then he is above me. If the killer is in the basement then he is below me. Therefore, the killer is either _________________ or _________________.

Can you fill in the blanks with the phrases that would make this argument valid? I’m guessing that you can. It should be pretty obvious. The conclusion of the argument is the following:

The killer is either above me or below me.

That this argument is valid should be obvious (can you imagine a scenario where all the premises are true and yet the conclusion is false?). What might not be as obvious is the form that this argument has. However, you should be able to identify that form if you utilize the tools that you have learned so far. The first premise is a disjunction. The second premise is a conditional statement whose antecedent is the left disjunct of the disjunction in the first premise. And the third premise is a conditional statement whose antecedent is the right disjunct of the disjunction in the first premise. The conclusion is the disjunction of the consequents of the conditionals in premises 2 and 3. Here is this form of inference using symbols:

- p v q

- p ⊃ r

- q ⊃ s

- ∴ r v s
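Since constructive dilemma involves four atomic propositions, its truth table has 16 rows. This Python sketch (helper names `implies` and `is_valid` are our own) checks all of them, with comments mapping each letter onto the killer example.

```python
from itertools import product

def implies(a, b):
    return (not a) or b

def is_valid(premises, conclusion, num_vars):
    # Valid iff no row makes every premise true while the conclusion is false.
    return not any(
        all(prem(*row) for prem in premises) and not conclusion(*row)
        for row in product([True, False], repeat=num_vars)
    )

# p: the killer is in the attic      r: the killer is above me
# q: the killer is in the basement   s: the killer is below me
cd_valid = is_valid(
    [
        lambda p, q, r, s: p or q,           # p v q
        lambda p, q, r, s: implies(p, r),    # p ⊃ r
        lambda p, q, r, s: implies(q, s),    # q ⊃ s
    ],
    lambda p, q, r, s: r or s,               # therefore r v s
    4,
)
print(cd_valid)  # True
```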

We have now introduced each of the 8 forms of inference. In the next section I will walk you through some basic proofs that utilize these 8 rules.

Exercise

Fill in the blanks with the valid form of inference that is being used and the lines the inference follows from. Note: the conclusion is written to the right of the last premise, following the “/∴” symbols.

Example 1:

1. M ⊃ ~N

2. M

3. H ⊃ N /∴ ~H

4. ~N Modus ponens, 1, 2

5. ~H Modus tollens, 3, 4

Example 2:

1. A v B

2. C ⊃ D

3. A ⊃ C

4. ~D /∴ B

5. A ⊃ D Hypothetical syllogism, 3, 2

6. ~A Modus tollens, 5, 4

7. B Disjunctive syllogism, 1, 6

# 1

1. A ⋅ C /∴ (A v E) ⋅ (C v D)

2. A _________________

3. C _________________

4. A v E _________________

5. C v D _________________

6. (A v E) ⋅ (C v D) ______________

# 2

1. A ⊃ (B ⊃ D)

2. ~D

3. D v A /∴ ~B

4. A _________________

5. B ⊃ D _________________

6. ~B _________________

# 3

1. A ⊃ ~B

2. A v C

3. ~~B ⋅ D /∴ C

4. ~~B _________________

5. ~A _________________

6. C _________________

# 4

1. A ⊃ B

2. A ⋅ ~D

3. B ⊃ C /∴ C ⋅ ~D

4. A _________________

5. A ⊃ C _________________

6. C _________________

7. ~D _________________

8. C ⋅ ~D _________________

# 5

1. C

2. A ⊃ B

3. C ⊃ D

4. D ⊃ E /∴ E v B

5. C ⊃ E _________________

6. C v A _________________

7. E v B _________________

# 6

1. (A v M) ⊃ R

2. (L ⊃ R) ⋅ ~R

3. ~(C ⋅ D) v (A v M) /∴ ~(C ⋅ D)

4. ~R _______________

5. ~(A v M) _______________

6. ~(C ⋅ D) _______________

# 7

1. (H ⋅ K) ⊃ L

2. ~R ⋅ K

3. K ⊃ (H v R) /∴ L

4. K _________________

5. H v R _________________

6. ~R _________________

7. H _________________

8. H ⋅ K _________________

9. L _________________

# 8

1. C ⊃ B

2. ~D ⋅ ~B

3. (A ⊃ (B ⊃ C)) v D

4. A v C /∴ B ⊃ C

5. ~D _________________

6. A ⊃ (B ⊃ C) _____________

7. ~B _________________

8. ~C __________________

9. A __________________

10. B ⊃ C __________________

11. ~B __________________

12. (B ⊃ C) v B __________________

[3] A better answer is that we need this rule in order to make the set of rules that I am presenting a sound and complete set of rules. That is, without it there would be arguments that are valid but that we would not be able to show are valid using this set of rules. In more advanced areas of logic, such as metalogic, logicians attempt to prove things about a particular system of logic, such as proving that the system is sound and complete.